Designing secure, resilient digital products in an AI-driven world.

The last few years marked the moment artificial intelligence moved from theory to practice. In 2025, AI became a daily tool for developers, designers, marketers, and operations teams alike. By 2026, the conversation is no longer "Should we use AI?" but "How do we use it safely, strategically, and sustainably?"

For web-based technologies and digital products, this shift is profound. AI is changing how software is built, how teams work, how data is handled, and how risk is managed. The organizations that thrive in 2026 will not be the ones using the most AI — but those using it deliberately.

Opportunities to do more with less

McKinsey's global AI survey showed high-performing organizations rethought workflows entirely — redesigning how work gets done rather than just using AI to augment old processes — a major contributor to measurable business value.

The productivity gains are real. GitHub's research found that developers using Copilot completed tasks 55% faster, with the biggest gains in repetitive or boilerplate-heavy work.

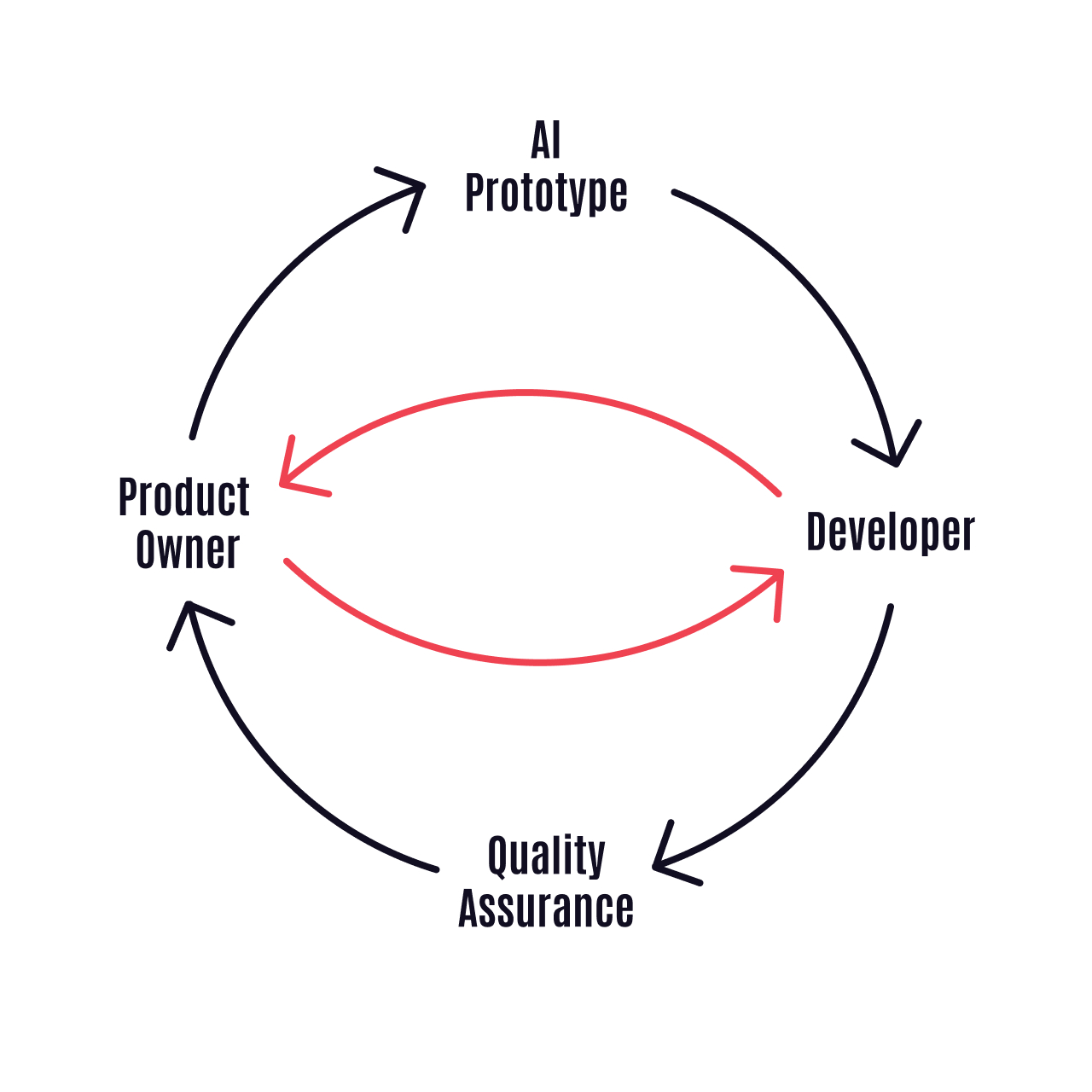

One of the biggest opportunities AI has created is the consolidation of roles across product teams, resulting in squads becoming leaner and more capable. Responsibilities that once required hand-offs between design, engineering, QA, and operations are increasingly handled within smaller, cross-functional groups — supported by AI.

Meanwhile, stakeholders and product owners are engaging directly with AI tools such as Figma Make, Bolt, and Lovable to try ideas rapidly in interactive environments. Teams get faster feedback loops without creating wasteful prototype branches or long review cycles.

Responsibilities shift, but ownership is critical for security

Smaller, more general teams are great for velocity, but without depth of expertise and ownership, products become hard to scale — and worse, are more vulnerable to security risks. Shipping AI-aided features without ownership is guaranteed to result in an increase in the number of incidents and the time taken to resolve them. DORA's State of DevOps research consistently shows that teams with clear ownership and well-defined responsibilities resolve incidents faster and deploy more reliably.

This creates a paradox: the same efficiency gains that make AI-assisted development attractive also introduce blind spots. When everyone can ship, who ensures what's shipped is safe?

Security is no longer just a technical concern — it's a design concern

When producing production-ready products with AI-augmented teams, design decisions are fundamental to success.

- Well-thought-out architecture is imperative for creating clear, bounded contexts for features to be built with AI.

- Strict separation of concerns to keep your product DRY and consistent.

- A code review process that encompasses both human and AI reviews. The team needs to be familiar with everything that is shipped.

Teams need to be confident what they ship is safe

In addition to threats becoming more sophisticated, opportunities for malicious actors to hijack products and machines have increased due to prompt injection.

In the case of the Shai-Hulud worm — a September 2025 npm supply-chain attack — the malicious package contained a prompt designed to act as a security tester that would farm and exfiltrate credentials. Given the nature of the prompt, it evaded traditional security scans and managed to infect 180+ packages.

But supply-chain attacks are only part of the picture. 2025 brought a wave of incidents that exposed how unprepared many organisations were.

- Analysts reported a sharp increase in phishing attacks attributed in part to generative AI tools enabling more convincing social-engineering campaigns.

- Reports showed employees frequently exposed sensitive data through AI chat tools without realizing the risk, with generative AI interactions containing corporate secrets or source code.

- European researchers highlighted how AI-powered chatbots on customer platforms could introduce vulnerabilities — not through direct exploitation, but through flawed input validation and generative context misuse.

- A zero-click vulnerability in Microsoft 365 Copilot allowed attackers to embed hidden prompts in emails. When Copilot processed the message, it executed malicious instructions and exfiltrated sensitive corporate data — without any user interaction.

These incidents — from supply-chain breaches to employee data exposure — show the real impact of rapid AI adoption without governance. The presence of AI in workflows has highlighted gaps in how data, prompts, and outputs are treated. For example:

- AI tools can inadvertently leak sensitive data if employees input proprietary information into public APIs without restrictions.

- Research by Veracode found that AI-generated code introduced security vulnerabilities in 45% of cases — and when given a choice between secure and insecure methods, AI models often chose the insecure option.

- AI models themselves have vulnerabilities, such as prompt injection and data exfiltration risks identified in large language models, which can create new classes of attack if not properly designed and defended against.

In other words, AI isn't inherently unsafe — but it introduces new attack surfaces that traditional security practices weren't designed to handle without adaptation.

Using LLMs safely in corporate environments

By 2026, most organisations are using large language models — whether formally approved or not. The risk is no longer use, but uncontrolled use.

Practical guardrails for corporate LLM use

Organisations should define:

- Approved tools versus prohibited consumer tools

- Data classification rules for prompts and outputs

- Audit and logging expectations

- Clear ownership of AI-generated artefacts

- Human review checkpoints for customer-facing or production outputs

The most common failure mode is not malicious intent — it's accidental data exposure through well-meaning experimentation. Consider the engineer who pastes production logs into ChatGPT to help debug an issue, not realising those logs contain customer PII. Or the sales team member who uploads a contract to "summarise the key terms" — inadvertently exposing deal structures to a third-party API.

LLMs commonly approved for corporate use

While approval depends on internal policy and jurisdiction, many organisations are standardising on:

- ChatGPT Enterprise / Business (private data handling, no training on customer inputs)

- Microsoft Copilot (M365 & Azure OpenAI Service) (enterprise identity, compliance alignment)

- Google Gemini for Workspace (corporate tenancy controls)

- Anthropic Claude for Enterprise (strong privacy and safety posture)

The key distinction is enterprise agreements, not model capability. Governance lives in contracts, access controls, and integration design.

The regulatory landscape is catching up

Organisations should also be aware that governance is no longer optional. The EU AI Act is now in force, requiring risk assessments and transparency for certain AI systems. The NIST AI Risk Management Framework provides a structured approach for US-based teams. And ISO/IEC 42001 offers an international standard for AI management systems. These frameworks aren't just compliance exercises — they're blueprints for building trustworthy AI-powered products.

Why external oversight matters

With AI embedded into every layer of product development, many organizations assume they can "figure it out as they go." But rapid adoption often outpaces security and governance — a gap seen in the IBM Cost of a Data Breach Report, which highlighted that ungoverned AI systems face higher breach costs and lengthy recovery timelines.

This is where specialist partners add value — not to slow teams down, but to create the guardrails that let them move fast with confidence:

- Independent oversight of where and how AI should be used

- Proven architectural patterns for secure, scalable products

- Blueprinted governance that prevents "shadow AI" risks — the unapproved, untracked use of AI tools that can leak data or bypass compliance

- Security best practices embedded into workflows

- True accountability as teams scale

When speed is the goal, external expertise becomes the safety net that ensures velocity doesn't come at the expense of control.

Looking ahead

The web in 2026 will be faster, leaner, and more automated than ever before — and more exposed to systemic risk if poorly governed.

AI is no longer the differentiator. How teams design workflows, secure systems, and share accountability is.

For organisations building digital products this year, success will not come from chasing tools — but from earning confidence in the systems they create and the partners they trust.

The question isn't whether to use AI — it's whether you've got the guardrails in place before you hit deploy.

References

- McKinsey — The State of AI

- GitHub — Copilot Productivity Research

- DORA — State of DevOps Research

- Checkmarx — Shai-Hulud Worm Analysis

- Cybersecurity Ventures — 2025 Almanac

- eSecurity Planet — Shadow AI and Data Leakage

- NIST AI Risk Management Framework

- IBM — Cost of a Data Breach Report

- Microsoft 365 Copilot Zero-Click Vulnerability

- Veracode — AI-Generated Code Security Risks